Build an in-product agent with LangGraph & MCP server

This guide walks you through a concrete in-product agent example that uses a Kapa Hosted MCP server.

The goal is not to give you a fully production-ready agent, but to help you understand how to build one from three parts:

- A reasoning model that decides what to do.

- Native tools that talk to your product (e.g. billing, teams, settings).

- A Kapa MCP server that provides context from your knowledge sources.

The example lives in this repo: kapa-ai/langchain-agent-example

It uses LangChain’s LangGraph for orchestration and OpenAI's GPT-5.1 reasoning model, but you can swap these for any agent framework (or none) and any reasoning model that supports tools.

Prerequisites

To get the most out of this example you need:

- A Kapa project with a Hosted MCP server configured (for in-product agents, use API key authentication)

- Some knowledge sources connected to that project

- An OpenAI API key

- Docker or a local Python environment

If you don’t have a Hosted MCP server yet, follow the steps in Hosted MCP server, then come back here.

1. Clone and run the example

First, clone the repository and create your .env file:

git clone https://github.com/kapa-ai/langchain-agent-example.git

cd langchain-agent-example

cp env.example .env

Edit .env with your own values:

OPENAI_API_KEY=sk-your-openai-api-key

KAPA_MCP_SERVER_URL=https://your-project.mcp.kapa.ai

KAPA_API_KEY=your-kapa-api-key

PRODUCT_NAME=My Awesome Product

KAPA_MCP_SERVER_URL and KAPA_API_KEY come from your Hosted MCP integration in Kapa.
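Before starting the agent, it can help to confirm that the settings above actually reached the process. The following is a minimal sketch, not part of the example repo: `REQUIRED_SETTINGS` and `missing_settings` are hypothetical names, and it assumes the values from .env have already been loaded into the environment (for example by Docker Compose or a dotenv loader). PRODUCT_NAME is left out because the agent code falls back to a default for it.

```python
import os

# Settings from .env that the example cannot run without (assumed list).
REQUIRED_SETTINGS = ("OPENAI_API_KEY", "KAPA_MCP_SERVER_URL", "KAPA_API_KEY")


def missing_settings(env=None) -> list[str]:
    """Return the names of required settings that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_SETTINGS if not (env.get(name) or "").strip()]
```

Calling `missing_settings()` with no arguments checks the real environment; passing a dict makes the check easy to try out in isolation.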

Now run the example.

Run with Docker:

docker compose run --rm agent

Run locally:

python -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

pip install -r requirements.txt

python main.py

You should see a simple CLI chat for “My Awesome Product Assistant”.

2. Try a few questions

Once the agent is running, start by asking a few questions that hit different tools:

- “What plan am I on?” → exercises the subscription tool

- “Who’s on my team?” → exercises the team tool

- “How do I set up webhooks?” → exercises the Kapa MCP docs tool

For simple questions, the agent will usually call a single tool and answer.

For more complex questions, it can combine multiple tool calls with several rounds of reasoning before it responds.

Here’s an example that uses both product data and information from the documentation in one answer.

Example: mixing product data + docs

You: We keep hitting 429 errors on webhooks. Are we on the wrong plan?

🧠 The agent thinks:

This sounds like a product question that involves both plan limits and webhook docs.

I should first see what the docs say about 429 errors on webhooks.

🔧 Calling tool: search_my_awesome_product_knowledge_sources

query: webhook 429 rate limit

✓ Tool completed

📎 The docs say (snippet):

"If you receive 429 responses for webhooks, it means you've exceeded your plan's rate limit.

Starter: 100 requests/minute

Pro: 300 requests/minute

Enterprise: 1000 requests/minute."

To know which limit applies here, I need to check the user's subscription plan.

🔧 Calling tool: get_subscription_info

✓ Tool completed

plan_name: Pro

status: active

seats_used: 8

seats_total: 10

billing_cycle: annual

features: [Unlimited projects, Advanced analytics, Priority support, Custom integrations, API access, SSO authentication]

🧠 The agent now knows:

- You're on the Pro plan

- For Pro, the docs say the webhook limit is 300 requests/minute

💬 Answer

You're on the **Pro** plan. According to the docs, Pro limits webhook deliveries to **300 requests per minute**.

Your recent usage is exceeding that, which is why you're seeing 429 errors. You have a few options:

- Reduce webhook volume (for example, batch events or filter which events you send)

- Add backoff / retry logic on your side

- Upgrade to a plan with higher webhook limits

I can also show you the docs section that explains these limits in more detail if you'd like.

3. Understand the pattern

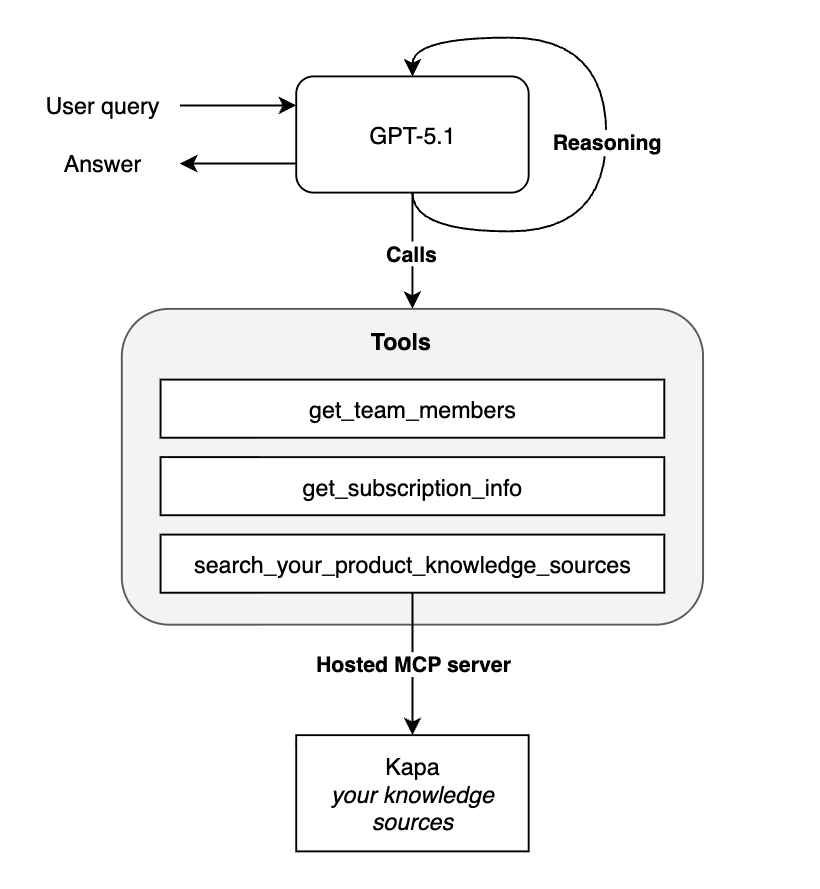

At a high level, the in-product agent looks like this:

The reasoning model (GPT-5.1) receives a user query, thinks through what to do, and then calls one or more tools:

- get_team_members – example native tool that reads some product data

- get_subscription_info – example native tool that reads account/billing data

- search_your_product_knowledge_sources – Kapa MCP semantic search over your documentation and other knowledge sources

In this repo, the first two are dummy tools — they just simulate calling your backend. In your own product, these tools could do anything that makes sense: creating resources, running jobs, updating settings, querying analytics, or talking to any internal API or database.

The agent follows a ReAct (Reasoning + Acting) loop:

1. Reason: Analyze the situation and decide what to do next

2. Act: Call one or more tools

3. Observe: See the results

4. Repeat: Go back to step 1 if more information is needed

5. Respond: Generate a final answer once satisfied

This loop is flexible: the agent might call one tool and respond immediately, or it might chain several tool calls with reasoning steps in between. It decides dynamically based on what it learns from each tool result.
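To make the loop concrete, here is a framework-free sketch of the same control flow. It is an illustration only: `scripted_model`, `search_docs`, and `run_react_loop` are hypothetical stand-ins, the tool outputs are hard-coded, and a real reasoning model would decide each step itself instead of following a script.

```python
from typing import Callable


# Hypothetical stand-ins for real tools; they return canned strings.
def get_subscription_info() -> str:
    return "plan_name: Pro"


def search_docs(query: str) -> str:
    return "Pro: 300 requests/minute"


TOOLS: dict[str, Callable[..., str]] = {
    "get_subscription_info": get_subscription_info,
    "search_docs": search_docs,
}


def scripted_model(observations: list[str]) -> dict:
    """Stand-in for the reasoning model: pick the next step from what was seen so far."""
    if len(observations) == 0:
        return {"tool": "search_docs", "args": {"query": "webhook 429 rate limit"}}
    if len(observations) == 1:
        return {"tool": "get_subscription_info", "args": {}}
    return {"answer": f"Docs: {observations[0]}. Account: {observations[1]}."}


def run_react_loop(model, tools, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        decision = model(observations)                  # Reason
        if "answer" in decision:                        # Respond
            return decision["answer"]
        tool = tools[decision["tool"]]                  # Act
        observations.append(tool(**decision["args"]))   # Observe, then Repeat
    raise RuntimeError("Agent did not finish within max_steps")
```

The `max_steps` cap is a design choice worth keeping in any real loop: it stops an agent that never decides it has enough information.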

Agent code setup (model + tools + MCP)

In src/agent.py, create_in_product_agent wires everything together:

import os

from langchain.agents import create_agent
from langchain_openai import ChatOpenAI
from langchain_mcp_adapters.client import MultiServerMCPClient

from src.tools.subscription import get_subscription_info
from src.tools.team import get_team_members

SYSTEM_PROMPT_TEMPLATE = """You are an intelligent assistant embedded in {product_name}.

You have access to three types of tools:

- `get_subscription_info`: Use this when users ask about their plan, billing, pricing, seat limits...
- `get_team_members`: Use this when users ask about who is on their team, roles, permissions...
- `search_{product_name}_knowledge_sources`: Use this for ANY questions about how to use {product_name}...

... (guidelines omitted for brevity) ...
"""


async def create_in_product_agent(
    mcp_server_url: str | None = None,
    mcp_api_key: str | None = None,
    product_name: str | None = None,
    model_name: str = "gpt-5.1",
):
    # Read config from env if not passed in
    mcp_server_url = mcp_server_url or os.getenv("KAPA_MCP_SERVER_URL")
    mcp_api_key = mcp_api_key or os.getenv("KAPA_API_KEY")
    product_name = product_name or os.getenv("PRODUCT_NAME", "<Your Product>")

    if not mcp_server_url or not mcp_api_key:
        raise ValueError("KAPA_MCP_SERVER_URL and KAPA_API_KEY must be set.")

    # 1) Start with native tools
    tools = [get_subscription_info, get_team_members]

    # 2) Add tools from the Hosted MCP server
    mcp_client = MultiServerMCPClient(
        {
            "kapa": {
                "transport": "streamable_http",
                "url": mcp_server_url,
                "headers": {"Authorization": f"Bearer {mcp_api_key}"},
            }
        }
    )
    mcp_tools = await mcp_client.get_tools()
    tools.extend(mcp_tools)

    print(f"Loaded {len(mcp_tools)} tool(s) from Kapa MCP server:")
    for tool in mcp_tools:
        print(f" → {tool.name}")
    print()

    # 3) Build the system prompt with your product name
    system_prompt = SYSTEM_PROMPT_TEMPLATE.format(product_name=product_name)

    # 4) Configure the reasoning model
    model = ChatOpenAI(
        model=model_name,
        reasoning={
            "effort": "medium",
            "summary": "detailed",
        },
    )

    # 5) Create the agent – this runs the ReAct loop for you
    agent = create_agent(
        model=model,
        tools=tools,
        system_prompt=system_prompt,
    )

    return agent

- The system prompt guides the agent's behavior.

- tools combines your native tools and the MCP tools loaded from your Hosted MCP server.

- create_agent handles the ReAct loop (Reason → Act → Observe → Repeat → Respond).
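Once create_in_product_agent returns, sending it a question could look roughly like the sketch below. This is an assumption-laden illustration rather than code from the repo: `ask` is a hypothetical helper, and it assumes the agent follows the LangGraph convention of taking and returning a state with a `messages` list. `FakeAgent` is an offline stand-in that mimics that shape so the sketch runs without network access.

```python
import asyncio
from types import SimpleNamespace


async def ask(agent, question: str) -> str:
    """Send one user message and return the text of the final message."""
    result = await agent.ainvoke(
        {"messages": [{"role": "user", "content": question}]}
    )
    content = result["messages"][-1].content
    if isinstance(content, str):
        return content
    # Some models return a list of content blocks; keep only the text parts.
    return "".join(
        block.get("text", "")
        for block in content
        if isinstance(block, dict) and block.get("type") == "text"
    )


class FakeAgent:
    """Offline stand-in that mimics the assumed result shape."""

    async def ainvoke(self, state: dict) -> dict:
        question = state["messages"][-1]["content"]
        reply = SimpleNamespace(content=f"You asked: {question}")
        return {"messages": [*state["messages"], reply]}


# With the real agent this would be:
#   agent = await create_in_product_agent()
#   print(await ask(agent, "What plan am I on?"))
```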

Example native tool: subscription info

The subscription tool in src/tools/subscription.py is a simple example of how a native tool is shaped. In production, this would call your real billing API instead of returning mock data:

from datetime import datetime, timedelta
from typing import Optional

from langchain_core.tools import tool
from pydantic import BaseModel, Field


class SubscriptionInfo(BaseModel):
    """Schema for subscription information."""

    plan_name: str = Field(description="Name of the subscription plan")
    status: str = Field(description="Current status of the subscription")
    seats_used: int = Field(description="Number of seats currently in use")
    seats_total: int = Field(description="Total seats available in the plan")
    billing_cycle: str = Field(description="Billing cycle (monthly/annual)")
    current_period_end: str = Field(description="End date of current billing period")
    monthly_price: float = Field(description="Monthly price in USD")
    features: list[str] = Field(description="List of features included in the plan")


MOCK_SUBSCRIPTION = SubscriptionInfo(
    plan_name="Pro",
    status="active",
    seats_used=8,
    seats_total=10,
    billing_cycle="annual",
    current_period_end=(datetime.now() + timedelta(days=45)).strftime("%Y-%m-%d"),
    monthly_price=49.99,
    features=[
        "Unlimited projects",
        "Advanced analytics",
        "Priority support",
        "Custom integrations",
        "API access",
        "SSO authentication",
    ],
)


@tool
def get_subscription_info(user_id: Optional[str] = None) -> str:
    """
    Get information about the current user's subscription plan.

    Use this when users ask about subscription, billing, plan features, or seat availability.
    """
    # user_id is unused by the mock; a real tool would look up this user's subscription.
    sub = MOCK_SUBSCRIPTION

    return f"""## Subscription Information

**Plan:** {sub.plan_name}
**Status:** {sub.status.capitalize()}

### Usage

- **Seats:** {sub.seats_used} / {sub.seats_total} used
- **Available seats:** {sub.seats_total - sub.seats_used}

### Billing

- **Cycle:** {sub.billing_cycle.capitalize()}
- **Price:** ${sub.monthly_price}/month (${sub.monthly_price * 12:.2f}/year)
- **Current period ends:** {sub.current_period_end}

### Included Features

{chr(10).join(f'- {feature}' for feature in sub.features)}
"""

This tool is deliberately simple, but it shows the pattern: wrap an existing backend capability (billing, teams, analytics, etc.) in a tool, and describe clearly when the agent should use it.
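The function name and docstring matter because they are what the model reads when choosing a tool; the `@tool` decorator uses them as the tool's name and description. The sketch below shows that idea with the standard library only. `get_invoice_status`, its fake data, and `describe` are hypothetical and not part of the repo.

```python
import inspect


def get_invoice_status(invoice_id: str) -> str:
    """
    Look up the payment status of a single invoice.

    Use this when users ask whether a specific invoice has been paid.
    """
    # Hypothetical backend lookup; replace with a call to your billing API.
    fake_backend = {"inv_001": "paid", "inv_002": "overdue"}
    status = fake_backend.get(invoice_id)
    if status is None:
        return f"No invoice found with id {invoice_id}."
    return f"Invoice {invoice_id} is {status}."


def describe(fn) -> dict:
    """Collect what a model would see about a tool: name, docstring, parameters."""
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn),
        "parameters": list(inspect.signature(fn).parameters),
    }
```

Printing `describe(get_invoice_status)` is a quick way to review a tool from the model's point of view before wiring it into the agent.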

4. Next steps: adapt this to your product

Once you’ve run the example and understand the flow, you can start adapting it to your own stack.

Keep the pattern

The core idea stays the same:

- A reasoning model or agent framework (LangChain, LangGraph, OpenAI agents, your own orchestration)

- Your native tools that talk to your backend

- The Kapa MCP retrieval tool for docs and guides

You can swap out the framework and model as long as they support tool calling.

Replace and extend the example tools

The example tools (subscription.py, team.py) are just placeholders that happen to read subscription and team data.

In your product, you can replace or extend them with tools that:

- Read data (e.g. resources, runs, logs, analytics)

- Change state (e.g. create dashboards, restart jobs, update settings)

- Orchestrate workflows (e.g. trigger deployments, run reports)

Make sure you:

- Pass user/tenant context into tools (user_id, org/tenant IDs, permissions)

- Enforce auth/authorization the same way you do in your normal APIs
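One way to apply both points is to build tools per request, binding the caller's context in a closure so the model never supplies the tenant itself. This is a sketch under assumptions: `RequestContext`, `make_team_tool`, the `team:read` permission string, and the in-memory `TEAM_MEMBERS` data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    user_id: str
    org_id: str
    permissions: frozenset[str]


# Hypothetical data store keyed by organisation.
TEAM_MEMBERS = {"org_1": ["ada", "grace"], "org_2": ["linus"]}


def make_team_tool(ctx: RequestContext):
    """Build a tool bound to one request's context."""

    def get_team_members() -> str:
        """List the members of the current user's team."""
        # Same check your normal API would make before returning team data.
        if "team:read" not in ctx.permissions:
            raise PermissionError(f"user {ctx.user_id} may not read team data")
        return ", ".join(TEAM_MEMBERS.get(ctx.org_id, []))

    return get_team_members
```

Because the tool takes no org or user argument, a prompt cannot steer it toward another tenant's data.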

Embed the agent into your app

The repo uses a CLI to keep things simple. In a real app you’ll:

- Move the agent call into a backend endpoint

- Send the user’s message + context from your frontend

- Stream the agent’s response back into your in-product chat UI
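For the streaming step, one common option is Server-Sent Events. The sketch below is framework-agnostic and only shows the framing: `to_sse`, `fake_agent_stream`, and `collect` are hypothetical names, and it assumes you already have an async iterator of text chunks from your agent. How you obtain those chunks depends on your agent framework, and the `done` event name is an arbitrary choice.

```python
import asyncio
import json
from typing import AsyncIterator


async def to_sse(chunks: AsyncIterator[str]) -> AsyncIterator[str]:
    """Wrap text chunks as Server-Sent Events frames, ending with a 'done' event."""
    async for chunk in chunks:
        payload = json.dumps({"text": chunk})
        yield f"data: {payload}\n\n"
    yield "event: done\ndata: {}\n\n"


async def fake_agent_stream(message: str) -> AsyncIterator[str]:
    """Stand-in for an agent's token stream."""
    for word in f"Echo: {message}".split():
        await asyncio.sleep(0)  # yield control, as a real stream would
        yield word + " "


async def collect(message: str) -> list[str]:
    """Gather all frames, as a backend endpoint would write them to the response."""
    return [frame async for frame in to_sse(fake_agent_stream(message))]
```

In a backend endpoint you would write each frame to the HTTP response as it is produced instead of collecting them in a list.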

The MCP integration doesn’t change: it remains a backend-only HTTP client that talks to your Hosted MCP server using your project API key.