How Mapbox, Monday.com and CircleCI Use In-App Product AI Assistants

Many forward-thinking technical companies like OpenAI, CircleCI, Temporal, Mixpanel, and Docker are adopting AI assistants that use Large Language Models (LLMs) grounded in their documentation to improve their developer experience.

In addition to enhancing their documentation sites, these companies are increasingly integrating AI bots directly into their products as AI assistants. Users can then get answers to their questions within the product itself, reducing the need to consult documentation or open support tickets.

This approach offers several key benefits:

- Instant, in-product support: Users can get help without leaving their workflow.

- Reduced support burden: Many common questions can be answered automatically.

- Improved documentation: Insights from user queries help identify areas for improvement.

Let's look at how some leading companies are implementing this technology to enhance their user experience.

Showcase #1: CircleCI's In-Product AI Assistant

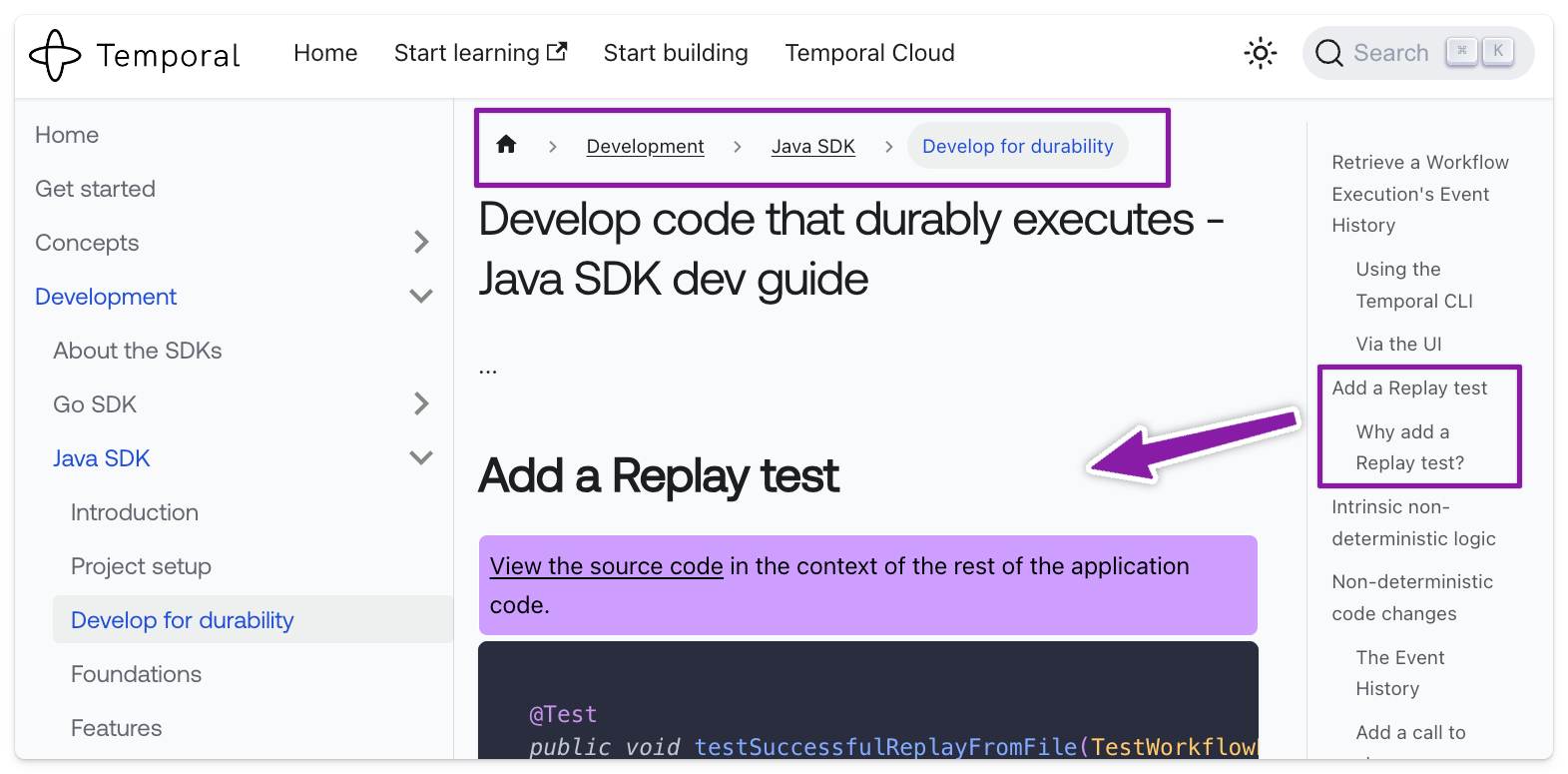

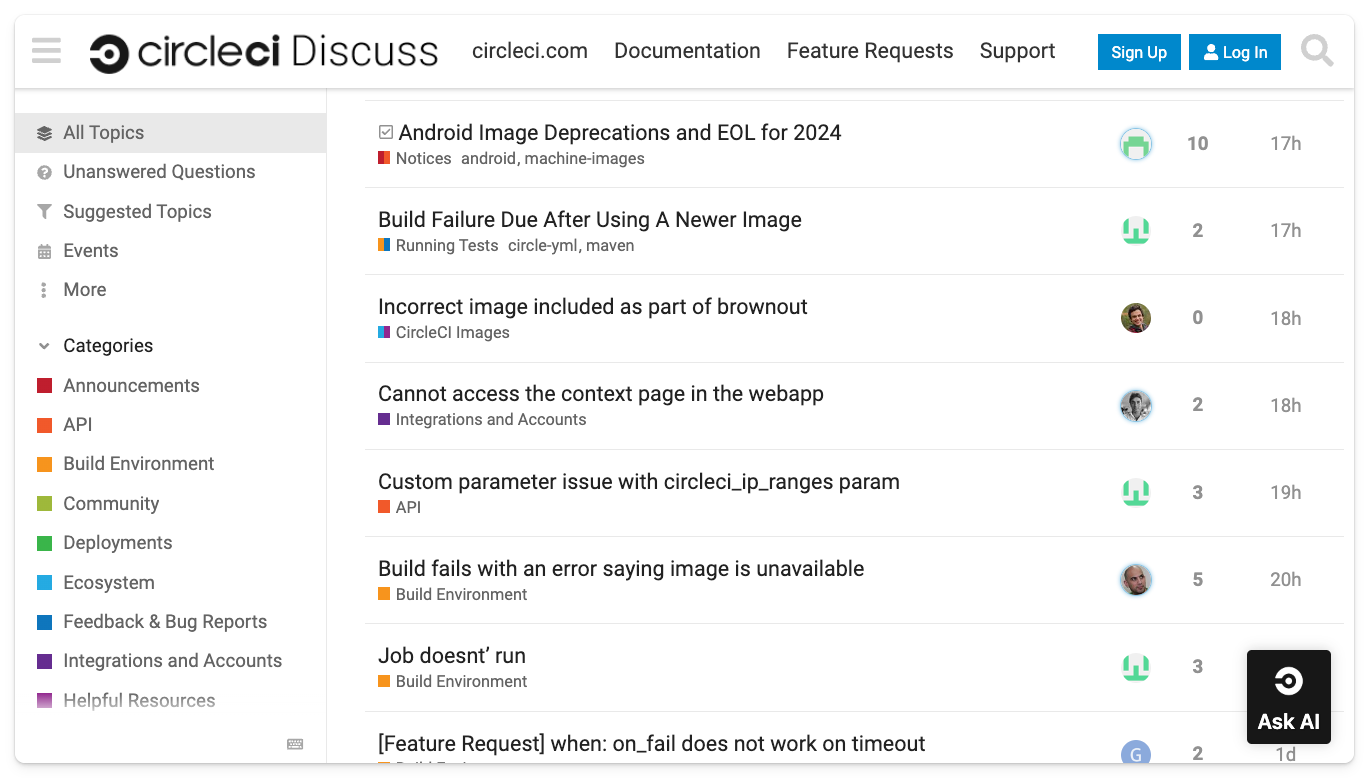

CircleCI, a continuous integration and continuous delivery platform, has used kapa.ai to implement an AI-powered chatbot with access to their comprehensive documentation, YouTube tutorials, community forums, and internal knowledge base.

By embedding the kapa.ai widget within the CircleCI application, CircleCI lets users get instant help while actively working on their pipelines, reducing workflow disruptions and improving efficiency.

Deployment strategy: Custom "Ask AI" drop-down button that opens the kapa widget on the CircleCI Web App.

Showcase #2: Monday.com Developer RAG AI Assistant

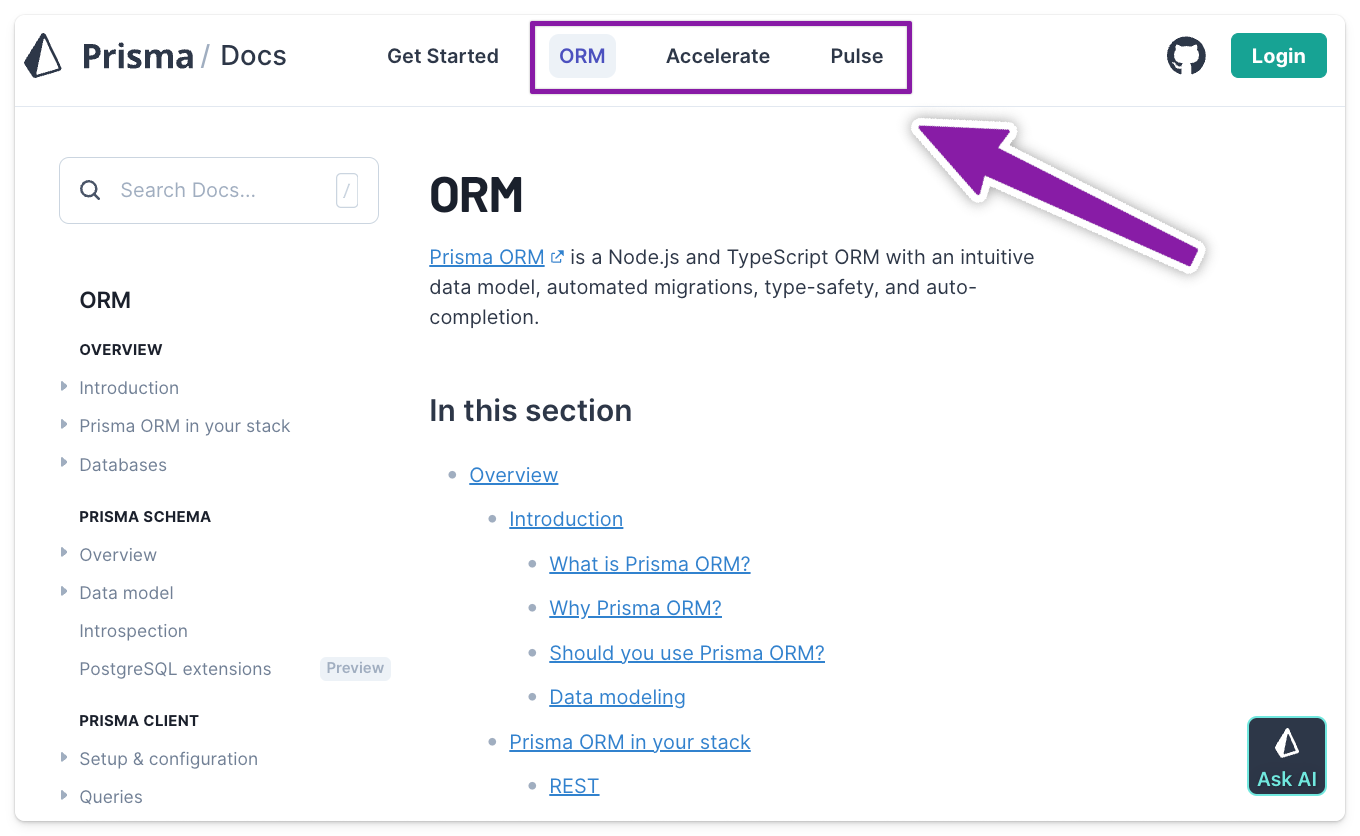

Monday.com, a work operating system (Work OS) platform, has implemented an AI-powered chatbot with access to their developer documentation and API specifications.

The Monday.com team recently embedded the kapa.ai chatbot within their developer center, providing real-time support and enhancing the overall developer experience. This AI assistant saves developers an estimated 15-30 minutes per query, while also helping identify documentation gaps and offering multilingual support.

"At monday.com, we embedded the AI bot within our developer center, minimizing context switches and boosting productivity." - Daniel Hai, AI & API Product Manager @ Monday.com

Deployment strategy: Custom "Ask AI" menu button that opens the kapa widget on the Monday.com Developer Center.

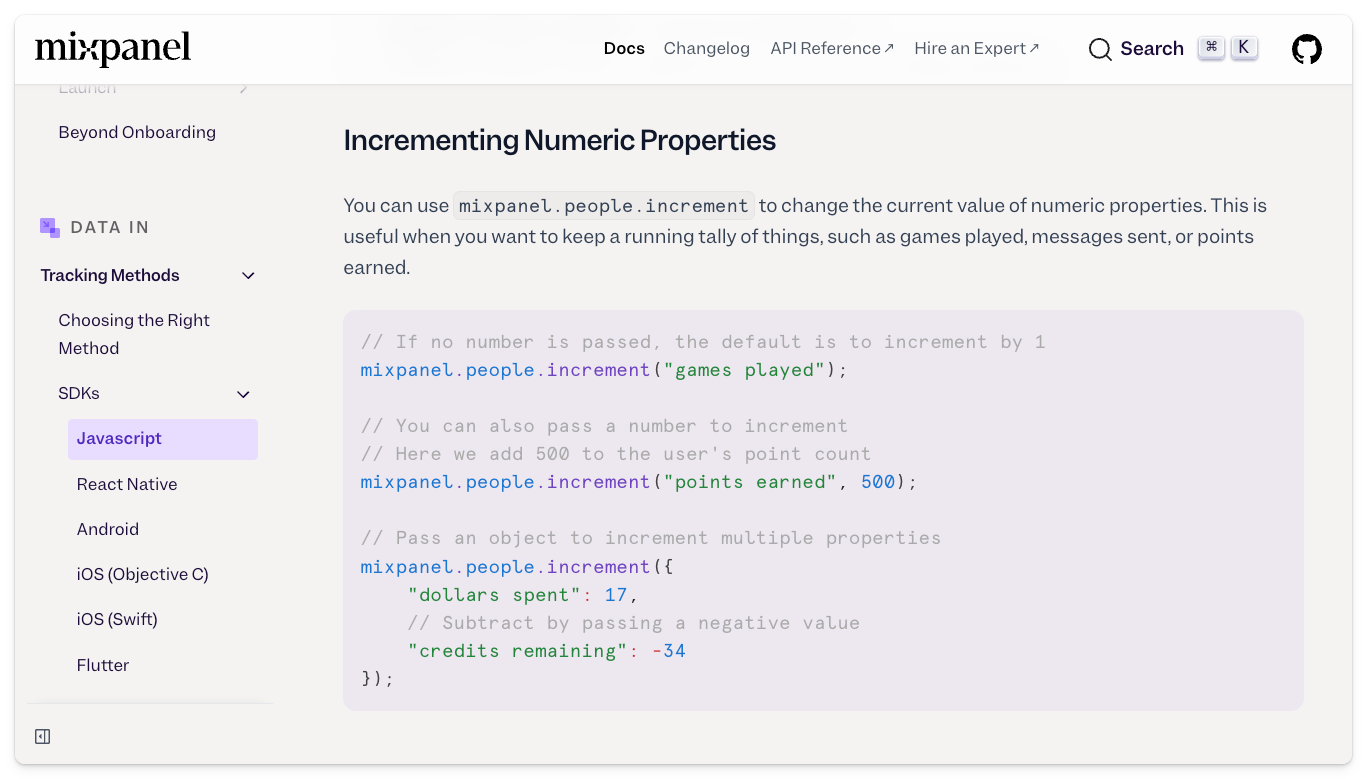

Showcase #3: Mapbox Account Page Support LLM

Mapbox, a leading provider of customizable maps and location services, has integrated kapa.ai's AI-powered chatbot directly into their account page. This implementation allows logged-in users to access immediate support without leaving their workflow.

The account page features the standard kapa.ai "Ask AI" widget in the bottom right corner, implemented using an off-the-shelf script tag. This widget provides users with instant access to Mapbox's extensive knowledge base, including information from developer documentation, API references, and other technical resources.

This account page implementation, along with similar deployments across Mapbox's documentation and support sites, has contributed to a 20% reduction in monthly support tickets.

Deployment strategy: Off-the-shelf "Ask AI" chatbot that opens the kapa widget on the Mapbox Account page.

By integrating AI assistants directly into your products, you can significantly enhance user experience and reduce support burdens. If you're interested in implementing an AI assistant within your product, sign up here for a demo or reach out to the kapa team if you have questions about how to integrate AI assistants effectively.

kapa.ai is a platform designed to help developer-facing companies build AI support bots. It ingests technical knowledge from various sources and uses Retrieval Augmented Generation (RAG) to provide accurate, contextual responses to user queries, improving customer experience and reducing support load. Trusted by over 100 companies, including OpenAI, Mapbox, Reddit, CircleCI, and Docker, kapa.ai is SOC 2 Type II certified.